How IPFS Works in the NFT Ecosystem

Introduction to IPFS

IPFS is a peer-to-peer hypermedia protocol designed to preserve and grow humanity’s knowledge by making the web upgradable, resilient, and more open.

RSS for Next.js

What is an RSS feed

An RSS (RDF Site Summary or Really Simple Syndication) feed is an XML file from which subscribers fetch recently updated content. It is served at a specific path on a website (usually /rss.xml) and returns all or part of the site’s content as a structured list in XML.

Why do you need an RSS feed

An RSS feed is an important part of any website that updates frequently. With RSS, your subscribers can easily pick up your new posts and interact with them right away.

How to create an RSS feed in ...
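To make the "structured XML list" concrete, here is a minimal sketch of the document a /rss.xml route could return. It assumes hypothetical `site` and `posts` objects (with `title`, `url`, `date`, and `summary` fields) and builds an RSS 2.0 feed as a string, escaping XML special characters. How the post wires this into Next.js is not in the excerpt, so routing is left out.

```javascript
// Escape the five XML special characters so titles and summaries can't break the markup.
function escapeXml(text) {
  return String(text)
    .replace(/&/g, '&amp;')
    .replace(/</g, '&lt;')
    .replace(/>/g, '&gt;')
    .replace(/"/g, '&quot;')
    .replace(/'/g, '&apos;');
}

// Build an RSS 2.0 feed. Both inputs are hypothetical shapes:
// site = { title, url, description }, posts = [{ title, url, date, summary }].
function buildRssFeed(site, posts) {
  const items = posts
    .map(
      (post) => `    <item>
      <title>${escapeXml(post.title)}</title>
      <link>${escapeXml(post.url)}</link>
      <guid>${escapeXml(post.url)}</guid>
      <pubDate>${new Date(post.date).toUTCString()}</pubDate>
      <description>${escapeXml(post.summary)}</description>
    </item>`
    )
    .join('\n');

  return `<?xml version="1.0" encoding="UTF-8"?>
<rss version="2.0">
  <channel>
    <title>${escapeXml(site.title)}</title>
    <link>${escapeXml(site.url)}</link>
    <description>${escapeXml(site.description)}</description>
${items}
  </channel>
</rss>`;
}
```

Building the string by hand keeps the sketch dependency-free; a real site would more likely use an RSS library and set the `Content-Type: application/xml` header on the response.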

Learning From Failure

This is a brief note on Postmortem Culture: Learning from Failure

The cost of failure is education - Devin Carraway

The postmortem concept is well known in the technology industry: it is a written record of an incident and its impact, the actions taken to mitigate or resolve it, its root causes, and the follow-up actions that reduce the likelihood of recurrence.

The primary goals of writing a postmortem are to ensure that the incident is documented, that all contributing root causes are well unde ...

Rules for My Code to Be Reviewed

I read the post How to Make Your Code Reviewer Fall in Love with You and distilled some rules for myself.

One rule to rule all rules:

Value your reviewer’s time.

Rule 1

Review my own code before sending a review request to my teammates. Don’t just check for mistakes: imagine reading it for the first time and look for anything that might confuse me.

Rule 2

Write a clear changelist description. A good description explains what the change achieves and why it is needed. Remember that the changeli ...

The Elements of Documentation

This is a glimpse into Art of Open Source Documentation.

Documentation helps your users succeed with your software, empowers them to be self-sufficient, enables them to give further feedback, and is the organizational backbone of your project.

There are three elements of good documentation:

WHAT problem your project solves

If people don’t know why your project exists, they won’t use it.

WHY your project is valuable

If your project cannot offer special and unique value, people won’t use it.

HOW ...

Understanding Financial Markets

Module 1: General Introduction and Key Concepts

1.1 Investment Management in a Nutshell

Investment management is about managing risk!

The key to successful Investment Management is to constantly be aware of the underlying risks:

Country Risk

A country may run into political trouble at some point, and its government may decide to close the borders or impose restrictions on capital movements.

Market Risk

Currency Risk

Liquidity Risk

If you’re investing in what w ...

Wallet Auth RFC

Abstract

This specification describes the authorization and authentication process between security-sensitive wallets and untrusted applications.

Since key-related resources should not be accessible to untrusted applications, such as websites, until the user grants them specific permissions, most endpoints should be guarded by an authentication system.

This specification defines some aspects of the authentication system, including:

The representation of permissions;

Two JSON-RPC methods to ge ...

Shorthand of Wallet Permissions System

EIP 2255

Summary

A proposed standard interface for restricting and permitting access to security-sensitive methods within a restricted web3 context like a website or “dapp”.

Many web3 applications today begin their sessions with a series of repetitive requests:

Reveal your wallet address to this site

Switch to a preferred network

Sign a cryptographic challenge

Grant a token allowance to our contract

Send a transaction to our contract

Many of these can be generalized into a set of human-readable ...
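EIP 2255 exposes such permissions through two RPC methods, `wallet_getPermissions` and `wallet_requestPermissions`. The sketch below models the wallet side under simplifying assumptions: permissions live in memory per origin, calls are synchronous (real providers expose them asynchronously through `request`), and `createPermissionController` plus the `approve` callback (a stand-in for the user prompt) are hypothetical names. The permission objects are modelled on the EIP's `invoker` / `parentCapability` / `caveats` fields.

```javascript
// Hypothetical in-memory permission controller, keyed by requesting origin.
// `approve(origin, capability)` stands in for the wallet's user prompt.
function createPermissionController(approve) {
  const grants = new Map(); // origin -> Map(capability -> permission object)

  function getPermissions(origin) {
    return Array.from((grants.get(origin) || new Map()).values());
  }

  function requestPermissions(origin, params) {
    // Request shape: [{ eth_accounts: {} }, ...]; only the first object is read here.
    const requested = Object.keys(params[0] || {});
    // Ask for every capability first, so a rejection leaves earlier grants untouched.
    for (const capability of requested) {
      if (!approve(origin, capability)) {
        throw new Error(`User rejected ${capability} for ${origin}`);
      }
    }
    const granted = new Map(grants.get(origin) || []);
    for (const capability of requested) {
      granted.set(capability, {
        invoker: origin,
        parentCapability: capability,
        caveats: [],
        date: Date.now(),
      });
    }
    grants.set(origin, granted);
    return getPermissions(origin);
  }

  // Dispatch the two JSON-RPC methods by name.
  return function handle(origin, { method, params = [] }) {
    switch (method) {
      case 'wallet_getPermissions':
        return getPermissions(origin);
      case 'wallet_requestPermissions':
        return requestPermissions(origin, params);
      default:
        throw new Error(`Unsupported method: ${method}`);
    }
  };
}
```

Keying grants by origin is what lets one approval replace the repetitive "reveal your address" prompt on later visits from the same site.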

Type Id on CKB

What is Type Id

Type Id is a digest of a unique type script.

The unique type script consists of three parts:

```
code_hash: type id contract code hash
hash_type: type # use the `type` kind code hash for upgradability
args: hash(this_transaction.inputs[0]) | output_index_of_this_cell
```

The uniqueness of the generated type script is guaranteed by the type id contract and by hash(this_transaction.inputs[0]) | output_index_of_this_cell, since

No man ever steps in the same river twice, for it’s not the same r ...

Shorthand of Ethereum Provider

EIP 1193

Summary

A JavaScript Ethereum Provider API for consistency across clients and applications.

This EIP formalizes an Ethereum Provider API to promote wallet interoperability. The API is designed to be minimal, event-driven, and agnostic of transport and RPC protocols.

Its functionality is easily extended by defining new RPC methods and message event types.

Terminology

Provider: a JavaScript object made available to a consumer that provides access to Ethereum by means of Clients

e.g. window ...
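EIP 1193 centres on a single `request({ method, params })` method that returns a Promise, plus events such as `chainChanged` and `accountsChanged`. As a rough illustration of that shape, here is a minimal mock provider that could stand in for a wallet when testing a dapp. The class name, constructor options, and `switchChain` helper are hypothetical; only `eth_chainId` and `eth_accounts` are answered, and anything else is rejected with the EIP's 4200 "Unsupported Method" error code.

```javascript
const { EventEmitter } = require('events');

// Hypothetical in-memory provider with the EIP 1193 shape:
// one Promise-returning request() entry point plus EventEmitter events.
class MockProvider extends EventEmitter {
  constructor({ chainId = '0x1', accounts = [] } = {}) {
    super();
    this.chainId = chainId;
    this.accounts = accounts;
  }

  async request({ method }) {
    switch (method) {
      case 'eth_chainId':
        return this.chainId;
      case 'eth_accounts':
        return [...this.accounts]; // copy so callers can't mutate provider state
      default: {
        const error = new Error(`The provider does not support ${method}`);
        error.code = 4200; // Unsupported Method
        throw error; // becomes a rejected Promise because request() is async
      }
    }
  }

  // Simulate the wallet switching networks; consumers learn via 'chainChanged'.
  switchChain(chainId) {
    this.chainId = chainId;
    this.emit('chainChanged', chainId);
  }
}
```

A consumer would call `await provider.request({ method: 'eth_chainId' })` and subscribe with `provider.on('chainChanged', handler)`, exactly as it would against a real injected provider.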

Inspect-protobuf.js

Getting started with protobuf

Protocol Buffers are a language-neutral, platform-neutral, extensible way of serializing structured data.

Basic usage

Using JSON descriptors

```json
// awesome.json
{
  "nested": {
    "AwesomeMessage": {
      "fields": {
        "awesomeField": {
          "type": "string",
          "id": 1
        }
      }
    }
  }
}
```

Type(T)

Extends

Type-specific properti ...

Crx-Config

This is a detailed cheatsheet for customizing a Chrome extension’s configuration.

Manifest

```json
{
  // required
  "manifest_version": 2, // integer indicates the version of the manifest file format.
  "name": "Name of Extension",
  "version": " ...
```

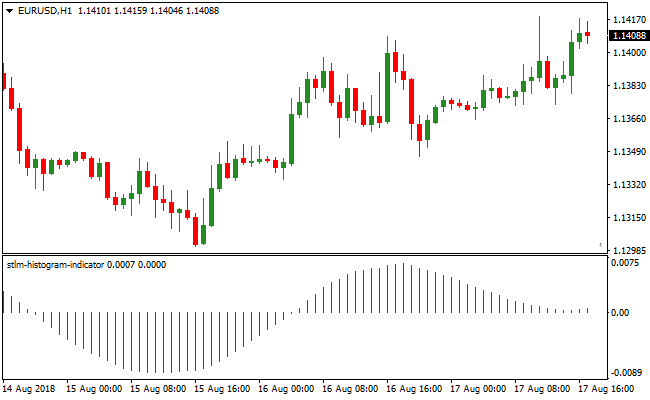

Introduction to MACD

Moving Average Convergence Divergence (MACD) is a trend-following momentum indicator that shows the relationship between two moving averages of a security’s price.

The MACD is calculated by subtracting the long-term Exponential Moving Average (EMA) from the short-term EMA; the conventional choice is the 26-period EMA subtracted from the 12-period EMA.

The MACD is displayed as the MACD line, and a nine-day EMA of the MACD, called the ‘signal line’, is plotted on top of it. The signal line functions as a trigger for buying and selling. Traders could buy the security when the MAC ...
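The calculation itself is short enough to sketch. The version below uses the conventional 12/26/9 periods and the usual EMA smoothing factor k = 2 / (period + 1). One assumption to flag: each EMA is seeded with its first value, whereas many charting tools seed with a simple moving average, so the earliest points will differ from what a trading platform shows. The function names are illustrative.

```javascript
// Exponential moving average with smoothing factor k = 2 / (period + 1).
// Assumption: seeded with the first value rather than an SMA of the first `period` values.
function ema(values, period) {
  const k = 2 / (period + 1);
  const result = [];
  values.forEach((value, i) => {
    result.push(i === 0 ? value : value * k + result[i - 1] * (1 - k));
  });
  return result;
}

// MACD line = short EMA - long EMA; signal line = EMA of the MACD line;
// histogram = MACD - signal, which changes sign where the two lines cross.
function macd(prices, shortPeriod = 12, longPeriod = 26, signalPeriod = 9) {
  const shortEma = ema(prices, shortPeriod);
  const longEma = ema(prices, longPeriod);
  const macdLine = prices.map((_, i) => shortEma[i] - longEma[i]);
  const signalLine = ema(macdLine, signalPeriod);
  const histogram = macdLine.map((m, i) => m - signalLine[i]);
  return { macdLine, signalLine, histogram };
}
```

Because the short EMA reacts faster, a steadily rising price series pushes the MACD line above zero, and a flat series keeps it at zero.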

Basic Components in nest.js

Module

A module in a Nest.js application organizes related controllers and service providers into a single logical unit.

Apply the @Module decorator, with metadata, to the module class to declare which controllers, service providers, and other related resources will be instantiated and used.

Nest also ships a @Global decorator that makes a module available to other modules implicitly, without their having to import it.

Nest’s module system also has a feature called dynamic modules, which enable you to produce ...

Snippet of GitHub Actions

Core Concepts of GitHub Actions

Workflow

A configurable automated process

Workflow run

An instance of the workflow that runs when the pre-configured event occurs

Workflow file

The YAML file that defines the workflow configuration with at least one job.

Job

A defined task made up of steps. Each job runs in a fresh instance of the virtual environment.

Step

A step is a set of tasks performed by a job. Each step in a job executes in the same virtual environment, allowing the actions ...

Methods of the String in JavaScript

String.prototype.charAt(index: number): string: Returns the character (exactly one UTF-16 code unit) at the specified index.

```js
'hello world'.charAt(1) // returns 'e'
```

String.prototype.charCodeAt(index: number): number: Returns a number that is the UTF-16 code unit value at the specified index.

```js
'hello world'.charCodeAt(1) // returns 101
```

String.prototype.codePointAt(index: number): number: Returns a nonnegative integer Number that is the code point value of the UTF-1 ...
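The difference between code units and code points only shows up outside the Basic Multilingual Plane, which 'hello world' never reaches. Here is a small comparison using U+1F600 (an emoji chosen purely for illustration), which UTF-16 stores as a surrogate pair:

```javascript
// U+1F600 lies outside the Basic Multilingual Plane, so UTF-16 stores it
// as a surrogate pair: two code units for one code point.
const text = 'a\u{1F600}';

const units = text.length;              // 3: 'a' plus two code units
const unit = text.charAt(1);            // '\uD83D', only the high surrogate
const unitValue = text.charCodeAt(1);   // 55357 (0xD83D)
const point = text.codePointAt(1);      // 128512 (0x1F600), the whole code point
const lowHalf = text.codePointAt(2);    // 56832 (0xDE00): indexing mid-pair yields the low surrogate
const codePoints = [...text].length;    // 2: string iteration walks code points

console.log({ units, unitValue, point, lowHalf, codePoints });
```

So `charAt` and `charCodeAt` see half a character here, while `codePointAt` returns the full value when given the index of the high surrogate.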

Note on BIP 173

wiki

Abstract

BIP 173 proposes a checksummed base32 format, Bech32, and a standard for native segregated witness output addresses that use it, replacing BIP 142. This format is not required for using segwit, but it is more efficient, flexible, and nicer to use.

Bech32

A Bech32 string is at most 90 characters long and consists of:

The human-readable part (hrp), which is intended to convey the type of data or anything else relevant to the reader.

The separator, which is always “1”. In case “1” is a ...
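The checksum that gives Bech32 its error detection is a BCH code computed by a small `polymod` function in BIP 173. Below is a sketch of encoding and decoding following that description; it works on raw 5-bit data values and leaves out the segwit-specific layer (witness version and 8-to-5-bit conversion). Function names are illustrative.

```javascript
// Bech32 alphabet and BCH generator constants from BIP 173.
const CHARSET = 'qpzry9x8gf2tvdw0s3jn54khce6mua7l';
const GENERATOR = [0x3b6a57b2, 0x26508e6d, 0x1ea119fa, 0x3d4233dd, 0x2a1462b3];

function polymod(values) {
  let chk = 1;
  for (const v of values) {
    const top = chk >>> 25;
    chk = ((chk & 0x1ffffff) << 5) ^ v;
    for (let i = 0; i < 5; i++) {
      if ((top >>> i) & 1) chk ^= GENERATOR[i];
    }
  }
  return chk;
}

// Each hrp character contributes its high bits, then a 0, then its low 5 bits.
function hrpExpand(hrp) {
  const chars = [...hrp].map((c) => c.charCodeAt(0));
  return [...chars.map((c) => c >> 5), 0, ...chars.map((c) => c & 31)];
}

function createChecksum(hrp, data) {
  const mod = polymod([...hrpExpand(hrp), ...data, 0, 0, 0, 0, 0, 0]) ^ 1;
  return Array.from({ length: 6 }, (_, i) => (mod >>> (5 * (5 - i))) & 31);
}

// data: array of 5-bit integers (0..31).
function encode(hrp, data) {
  const combined = [...data, ...createChecksum(hrp, data)];
  return hrp + '1' + combined.map((d) => CHARSET[d]).join('');
}

// Returns { hrp, data } without the checksum, or null if the string is invalid.
function decode(str) {
  if (str.length > 90) return null;
  if (str !== str.toLowerCase() && str !== str.toUpperCase()) return null; // mixed case
  const s = str.toLowerCase();
  const pos = s.lastIndexOf('1'); // the separator is the last '1'
  if (pos < 1 || pos + 7 > s.length) return null; // need an hrp and 6 checksum chars
  const hrp = s.slice(0, pos);
  for (const c of hrp) {
    const code = c.charCodeAt(0);
    if (code < 33 || code > 126) return null; // hrp must be printable US-ASCII
  }
  const data = [];
  for (const c of s.slice(pos + 1)) {
    const d = CHARSET.indexOf(c);
    if (d === -1) return null;
    data.push(d);
  }
  if (polymod([...hrpExpand(hrp), ...data]) !== 1) return null;
  return { hrp, data: data.slice(0, -6) };
}
```

Searching for the *last* “1” is what allows the hrp itself to contain “1” characters.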

[WIP] Notes on Building Product, Talking to Users, and Growing

original notes

You need a lot of feedback. Maybe you get a lot of people to your site, but no one sticks around because you didn’t get that initial user feedback.

When you have an idea, think hard about what it actually solves: what is the real problem? You should be able to describe the problem in one sentence, and then think it through.

When you have a problem and are able to state it, you should think about

Keystore

Original

What is a keystore file

A keystore file is an encrypted version of your unique private key, which you use to sign your transactions. If you lose this file, you lose your assets.

What do keystore files look like

```json
{
  "crypto": {
    "cipher": "aes-128-ctr",
    "cipherparams": {
      "iv": "83dbcc02d8ccb40e466191a123791e0e"
    },
    "ciphertext": "d172bf743a674da9cda ...
```
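The `cipher`, `cipherparams.iv`, and `ciphertext` fields are enough to see how the private key is recovered. Here is a partial sketch of that step, assuming the scrypt KDF with `kdfparams` of `{ n, r, p, dklen, salt }` (the KDF is not visible in the excerpt) and hex-encoded fields. AES-128-CTR uses the first 16 bytes of the derived key. MAC verification is deliberately omitted because it needs Keccak-256, which Node's built-in `crypto` module does not reliably provide, so this sketch cannot tell a wrong password from a right one.

```javascript
const crypto = require('crypto');

// Decrypt the `crypto` section of a keystore (scrypt KDF assumed; no MAC check).
function decryptKeystoreCrypto(cryptoSection, password) {
  if (cryptoSection.kdf !== 'scrypt') {
    throw new Error(`This sketch only handles scrypt, got ${cryptoSection.kdf}`);
  }
  const { n, r, p, dklen, salt } = cryptoSection.kdfparams;
  const derivedKey = crypto.scryptSync(password, Buffer.from(salt, 'hex'), dklen, {
    N: n,
    r,
    p,
    maxmem: 256 * n * r, // scrypt needs about 128 * N * r bytes; leave headroom
  });
  // aes-128-ctr takes the first 16 bytes of the derived key as its key.
  const decipher = crypto.createDecipheriv(
    cryptoSection.cipher,
    derivedKey.subarray(0, 16),
    Buffer.from(cryptoSection.cipherparams.iv, 'hex')
  );
  return Buffer.concat([
    decipher.update(Buffer.from(cryptoSection.ciphertext, 'hex')),
    decipher.final(),
  ]);
}
```

CTR mode never fails on a wrong key; it just produces different bytes. That is exactly why real keystores carry a MAC, and why a production decoder must verify it before trusting the output.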

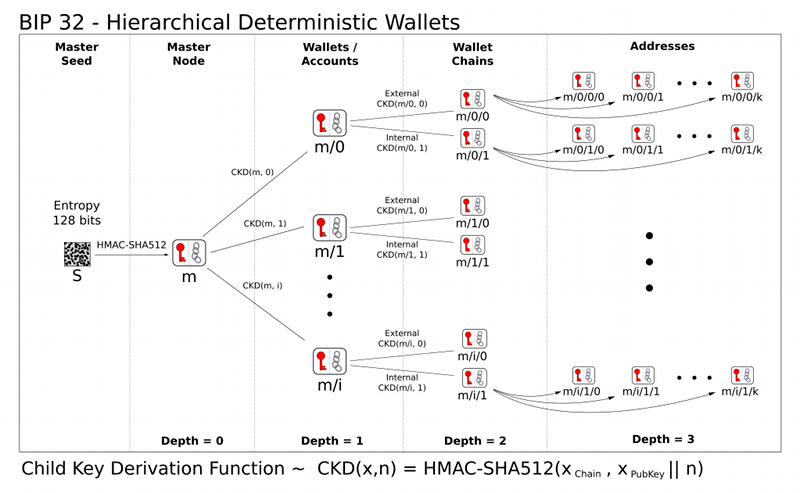

Note on BIP 32

wiki

The specification consists of two parts:

a system for deriving a tree of keypairs from a single seed.

a demonstration of how to build a wallet structure on top of such a tree.

Specification: Key derivation

Convention

In this text we assume the public key cryptography used in Bitcoin, namely elliptic curve cryptography using the field and curve parameters defined by secp256k1. Variables below are either:

Integers modulo the order of the curve (referred to as n)

Coordinates of points on the curve ...
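The two derivation steps the rest of the specification builds on can be sketched directly: the master key comes from HMAC-SHA512 keyed with "Bitcoin seed", and private child derivation (CKDpriv) hashes either the parent private key (hardened indices, i ≥ 2^31) or the compressed parent public key (normal indices) with the chain code. The sketch assumes Node's built-in `crypto` module with the secp256k1 curve available; function names are illustrative, and public derivation (CKDpub) and extended-key serialization are left out. It is not meant for handling real funds.

```javascript
const crypto = require('crypto');

// Order n of the secp256k1 group, and the first hardened index (2^31).
const N = 0xfffffffffffffffffffffffffffffffebaaedce6af48a03bbfd25e8cd0364141n;
const HARDENED_OFFSET = 0x80000000;

const toBigInt = (buf) => BigInt('0x' + buf.toString('hex'));
const toBuffer32 = (x) => Buffer.from(x.toString(16).padStart(64, '0'), 'hex');

function ser32(i) {
  const b = Buffer.alloc(4);
  b.writeUInt32BE(i);
  return b;
}

// Master key generation: I = HMAC-SHA512(Key = "Bitcoin seed", Data = seed).
function masterKeyFromSeed(seed) {
  const I = crypto.createHmac('sha512', 'Bitcoin seed').update(seed).digest();
  const IL = I.subarray(0, 32);
  const k = toBigInt(IL);
  if (k === 0n || k >= N) throw new Error('Invalid master key; use another seed');
  return { key: IL, chainCode: I.subarray(32) };
}

// serP(point(k)): compressed public key via Node's secp256k1 ECDH support.
function compressedPublicKey(privateKey) {
  const ecdh = crypto.createECDH('secp256k1');
  ecdh.setPrivateKey(privateKey);
  return ecdh.getPublicKey(null, 'compressed');
}

// CKDpriv((k_par, c_par), i) -> (k_i, c_i)
function deriveChildPrivate({ key, chainCode }, index) {
  const data = index >= HARDENED_OFFSET
    ? Buffer.concat([Buffer.alloc(1), key, ser32(index)]) // 0x00 || ser256(k_par) || ser32(i)
    : Buffer.concat([compressedPublicKey(key), ser32(index)]); // serP(point(k_par)) || ser32(i)
  const I = crypto.createHmac('sha512', chainCode).update(data).digest();
  const IL = toBigInt(I.subarray(0, 32));
  const child = (IL + toBigInt(key)) % N; // k_i = parse256(IL) + k_par (mod n)
  if (IL >= N || child === 0n) throw new Error('Invalid child key; proceed with the next index');
  return { key: toBuffer32(child), chainCode: I.subarray(32) };
}
```

Hardened derivation never touches the public key, which is why a leaked child private key plus the parent extended *public* key cannot be used to recover the parent private key along a hardened path.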